Introduction: The Paradox of Powerful AI

We live in a paradoxical age of artificial intelligence. Today's most advanced large language models (LLMs) can write elegant poetry, generate complex computer code, and even pass professional exams. Yet ask that same model to solve a seemingly simple multi-step puzzle, and it might get stuck in a loop, propose impossible actions, or "hallucinate" a solution that breaks the rules of the game. This gap between what LLMs know and how poorly they plan is one of the biggest challenges in AI today.

These common failure modes highlight a key weakness: while LLMs can often perform individual reasoning steps correctly, they struggle to autonomously coordinate these steps to achieve a specific goal. They lack the robust executive function that allows humans to break down a problem, monitor progress, and correct course when they make a mistake.

To address this, a team of researchers is turning to the most sophisticated planning machine we know: the human brain. They've developed a new AI architecture that mimics the component processes of our brain's prefrontal cortex—the region responsible for planning and decision-making. The results suggest a fundamental shift in thinking: what if the key to better AI isn't a single, larger brain, but a smarter team of specialists working in concert?

AI Planning Gets a Brain-Inspired Upgrade

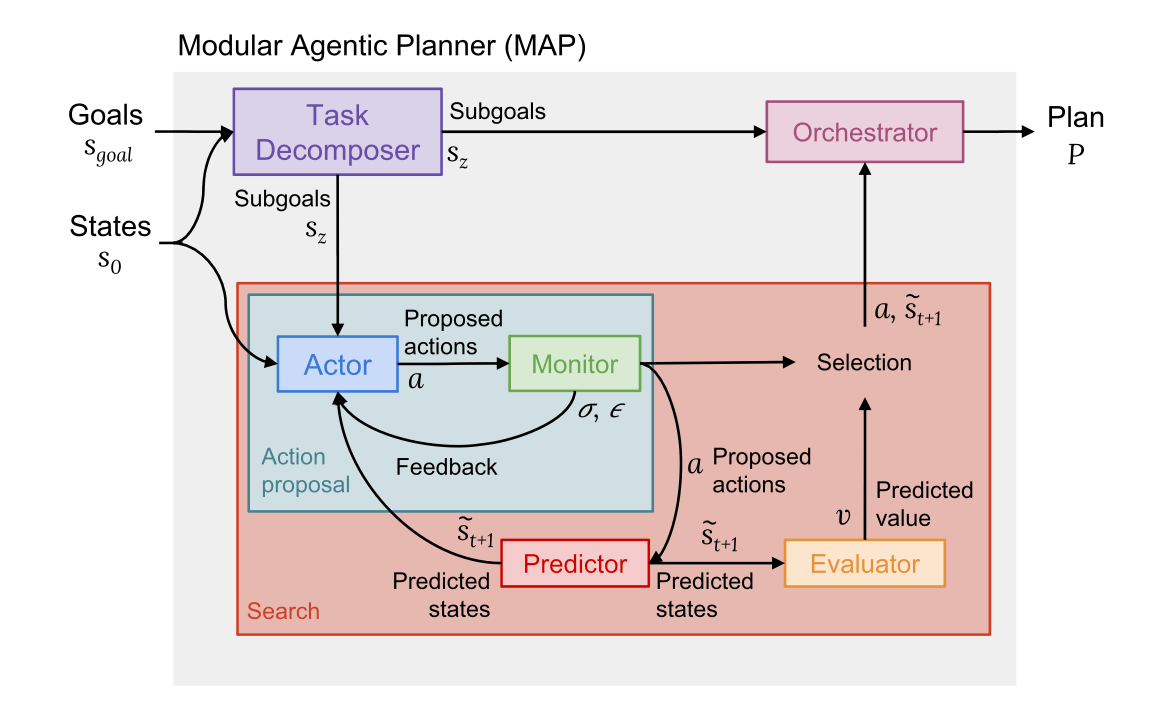

The core of this new approach is a system called the Modular Agentic Planner (MAP). Instead of using a single, monolithic LLM to handle every aspect of a problem, MAP assigns different LLM instances to specialized roles, much like how distinct regions of the brain's prefrontal cortex (PFC) handle different executive functions. It treats planning as a team sport, not a solo performance.

The MAP architecture is composed of six primary modules, each with a cognitive neuroscience parallel:

The Task Decomposer and Orchestrator act like the anterior PFC, which is responsible for high-level goal setting and coordination. They break down a final goal into manageable subgoals and determine when each has been achieved.

The Actor proposes potential actions to achieve the current goal, analogous to the dorsolateral PFC's role in top-down decision-making.

The Monitor acts as the brain's anterior cingulate cortex, the crucial error-detection circuit that fires when you realize you've made a mistake. It assesses the Actor's proposed actions to see if they violate the task's rules.

The Predictor and Evaluator function like the orbitofrontal cortex, which predicts future states and estimates their value. They look ahead to see the likely outcome of an action and judge how helpful that outcome will be.

By dividing the labor of planning among these brain-inspired specialists, MAP creates a more structured and reliable reasoning process.
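To make the division of labor concrete, here is a minimal sketch of how the six roles could fit together in one planning loop. It is an illustration under loud assumptions, not the researchers' implementation: each module is a plain Python function standing in for a prompted LLM instance, the "task" is a toy number-line puzzle invented for this example, the look-ahead is a single step, and the Monitor simply filters proposals rather than sending feedback to the Actor.

```python
from typing import List, Optional

# Toy problem (hypothetical): walk from a start position to a goal position
# on a board numbered 0..10, using steps of +3, +1, or -1.
State = int
Action = int

def task_decomposer(start: State, goal: State) -> List[State]:
    """Break the final goal into subgoals (here: a midpoint, then the goal)."""
    mid = (start + goal) // 2
    return [mid, goal] if mid not in (start, goal) else [goal]

def orchestrator(state: State, subgoal: State) -> bool:
    """Decide whether the current subgoal has been achieved."""
    return state == subgoal

def actor(state: State, subgoal: State) -> List[Action]:
    """Propose candidate actions; it does not check the rules itself."""
    return [3, 1, -1]

def monitor(state: State, action: Action) -> bool:
    """Rule check: the move must keep the position on the board."""
    return 0 <= state + action <= 10

def predictor(state: State, action: Action) -> State:
    """Predict the state that would result from taking the action."""
    return state + action

def evaluator(state: State, subgoal: State) -> int:
    """Score a predicted state; closer to the subgoal is better."""
    return -abs(subgoal - state)

def map_plan(start: State, goal: State, max_steps: int = 20) -> Optional[List[Action]]:
    """Run the simplified loop; return the chosen actions or None on failure."""
    state, plan = start, []
    for subgoal in task_decomposer(start, goal):
        while not orchestrator(state, subgoal):
            if len(plan) >= max_steps:
                return None
            # Monitor filters out rule-breaking proposals before any look-ahead.
            valid = [a for a in actor(state, subgoal) if monitor(state, a)]
            if not valid:
                return None
            # Predictor + Evaluator: pick the action with the best predicted outcome.
            best = max(valid, key=lambda a: evaluator(predictor(state, a), subgoal))
            state = predictor(state, best)
            plan.append(best)
    return plan

print(map_plan(0, 7))
```

The point of the sketch is the shape of the loop: no single function both proposes and validates a move, so a rule violation has to get past a component whose only job is to catch it.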

The Secret is a Built-in "Rule-Checker"

One of the most significant weaknesses of standard LLMs is their tendency to propose actions that are invalid or break the rules of a problem. They might suggest moving a game piece to an impossible location or traversing a path between two rooms that aren't connected. The MAP architecture addresses this head-on with its Monitor module.

The importance of this dedicated "rule-checker" is starkly illustrated in an experiment using the classic Tower of Hanoi puzzle. When researchers ran MAP without its Monitor module, performance plummeted: the percentage of solved problems fell from 74% to just 27%, while the share of invalid moves it proposed shot up to 31%. With the Monitor active, the full MAP system proposed zero invalid moves.

This demonstrates that having a dedicated internal referee—whose sole job is to enforce the rules—is far more effective than simply hoping a single LLM will remember and correctly apply the constraints at every single step. This finding is supported by a key observation from the researchers:

Momennejad et al. noted that LLMs often attempt to traverse invalid or hallucinated paths in planning problems (e.g., to move between rooms that are not connected), even though they can correctly identify these paths as invalid when probed separately.
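As a rough illustration of what a dedicated rule-checker does, the sketch below validates proposed Tower of Hanoi moves against the standard rules of the puzzle. In MAP the Monitor is an LLM instance, so this hand-written function is only a stand-in; the state representation, the function names, and the error messages are assumptions made for this example.

```python
from typing import Dict, List, Tuple

# Assumed representation: peg name -> disks from bottom to top,
# where a larger number means a larger disk.
Pegs = Dict[str, List[int]]
Move = Tuple[str, str]  # (source peg, destination peg)

def check_move(pegs: Pegs, src: str, dst: str) -> Tuple[bool, str]:
    """Return (is_valid, reason) for moving the top disk from src to dst."""
    if src not in pegs or dst not in pegs:
        return False, "unknown peg"
    if src == dst:
        return False, "source and destination are the same peg"
    if not pegs[src]:
        return False, f"peg {src} is empty"
    disk = pegs[src][-1]
    # A disk may never be placed on top of a smaller disk.
    if pegs[dst] and pegs[dst][-1] < disk:
        return False, f"disk {disk} cannot sit on smaller disk {pegs[dst][-1]}"
    return True, "ok"

def filter_proposals(pegs: Pegs, proposals: List[Move]) -> List[Move]:
    """Keep only the proposed moves that pass the rule check."""
    return [move for move in proposals if check_move(pegs, *move)[0]]

pegs = {"A": [3, 2], "B": [1], "C": []}
print(filter_proposals(pegs, [("A", "B"), ("A", "C"), ("C", "A")]))
```

Returning a reason alongside the verdict matters in a modular setup: the explanation can be passed back to whichever component proposed the move so that it can try again.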

A Smarter Architecture Outperforms a Bigger Model

Perhaps the most surprising finding is that a superior architecture can matter more than the raw power of the underlying AI model. The researchers tested MAP not only with the top-tier GPT-4 but also with a smaller, more cost-efficient model, Llama3-70B.

The results were remarkable. When implemented with the smaller Llama3-70B model, MAP didn't just outperform other methods using the same model; it also beat the best-performing GPT-4 baseline (a method called GPT-4 ICL) on the 3-disk Tower of Hanoi problem. MAP using Llama3-70B solved 50% of the problems, while the baseline using the more powerful GPT-4 solved only 46%.

This finding carries significant implications for the future of AI development. It suggests that for complex planning, a well-designed system of interacting components can be a more efficient path to better performance than simply scaling up model size, which has major consequences for the computational and financial costs of building powerful AI.

The System Generalizes Planning Skills to New Problems

A true sign of intelligence isn't just solving a known problem but applying learned skills to new, unfamiliar situations. The study's transfer experiments showed that MAP excels here as well. The system demonstrated a superior ability to generalize its planning capabilities to tasks it hadn't seen before.

For instance, researchers tested whether skills learned on a simple "blocksworld" task (stacking blocks) could be transferred to a more confusing "mystery blocksworld" task, which the study describes as involving "objects and actions with meaningless or confusing names." This setup prevents the AI from relying on semantic cues and forces it to use abstract reasoning. While other approaches struggled to adapt, MAP's modular structure allowed it to apply the underlying skill of planning more flexibly, achieving a 12.2% success rate compared to less than 2% for other methods.

This indicates that the brain-inspired architecture isn't just memorizing solutions. Instead, it appears to be learning a more robust and abstract capability for planning that can be generalized to novel challenges.
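To see why the "mystery" variant removes semantic cues, consider the small sketch below, which rewrites a blocksworld-style instruction using a table of made-up names. The mapping, the instruction format, and the function name are all hypothetical; the benchmark's actual vocabulary is not given here, so treat this purely as an illustration of the idea.

```python
import re
from typing import Dict

def obfuscate(text: str, mapping: Dict[str, str]) -> str:
    """Replace each whole-word occurrence of a known name with its stand-in."""
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, mapping)) + r")\b")
    return pattern.sub(lambda match: mapping[match.group(0)], text)

# Hypothetical renaming table: the structure of the task is unchanged,
# but the words no longer hint at what the actions or objects mean.
mapping = {
    "stack": "zorp",
    "unstack": "flim",
    "block_a": "obj_17",
    "block_b": "obj_42",
}

print(obfuscate("unstack block_a then stack block_a on block_b", mapping))
```

The underlying plan has exactly the same shape before and after renaming, which is why success on the renamed task is evidence of planning ability rather than familiarity with the words.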

Conclusion: The Future of AI Might Be a Team of Specialists

The Modular Agentic Planner offers a compelling new direction for AI development. It demonstrates that by breaking down the complex process of planning into specialized, interacting functions—inspired by the architecture of the human brain—we can overcome some of the most stubborn limitations of today's powerful but flawed LLMs. The success of this modular approach suggests that the future of intelligent systems may lie not in building one giant, monolithic AI, but in creating teams of specialized agents that work together in a coordinated, brain-like fashion.

This research leaves us with a tantalizing question for the future of AI. If creating a "prefrontal cortex" for AI can help solve its planning problem, what other parts of the human mind could we model to build truly intelligent systems?
